Differential privacy is one of those ideas that sound impossible. It describes a mechanism that outputs information about an underlying dataset while guaranteeing protection, against identification attempts by any means, for the individuals in the data [1]. At a time when big data is so hyped on the one hand and data breaches seem rampant on the other, why aren't we hearing more about differential privacy (DP)?

I quote Moritz Hardt from his blog:

> To be blunt, I think an important ingredient that’s missing in the current differential privacy ecosystem is money. There is only so much that academic researchers can do to promote a technology. Beyond a certain point businesses have to commercialize the technology for it to be successful.

So what is differential privacy? First of all, DP is a constraint: roughly, the output of your data release mechanism should change very little whether or not any one person's record is in the dataset. If your mechanism satisfies that constraint, you get a provable bound on how much it can reveal about any individual, which is what protects the data against de-anonymization. DP came out of Microsoft Research initially, and it has been applied in many different ways: there are DP implementations for machine learning algorithms, data release, and more. Here's an explain-like-I'm-5 description, courtesy of the Google Research Blog, of their RAPPOR project, which is based on DP.

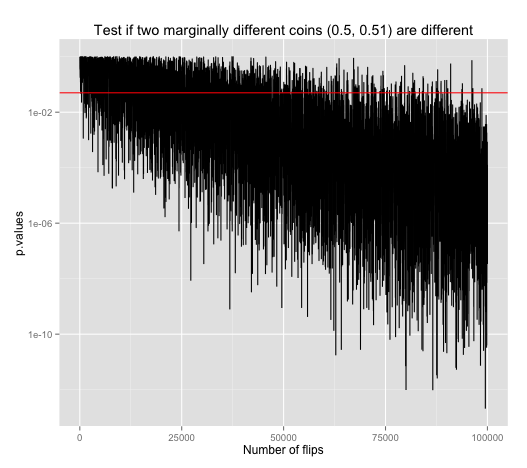

> To understand RAPPOR, consider the following example. Let’s say you wanted to count how many of your online friends were dogs, while respecting the maxim that, on the Internet, nobody should know you’re a dog. To do this, you could ask each friend to answer the question “Are you a dog?” in the following way. Each friend should flip a coin in secret, and answer the question truthfully if the coin came up heads; but, if the coin came up tails, that friend should always say “Yes” regardless. Then you could get a good estimate of the true count from the greater-than-half fraction of your friends that answered “Yes”. However, you still wouldn’t know which of your friends was a dog: each answer “Yes” would most likely be due to that friend’s coin flip coming up tails.

Google's Chrome browser uses RAPPOR to collect some sensitive data that even Google doesn't want to store because of end-user privacy risks [2]. With DP, they can get access to useful data that they otherwise couldn't have collected.
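
The coin-flip scheme from the dog example is easy to simulate. What follows is a minimal sketch of that toy scheme only, not of RAPPOR itself; the function names, the population size, and the 20% share of dogs are all made up for illustration. It relies on one observation: if a fraction p of friends are dogs, the expected share of "Yes" answers is 0.5 * p + 0.5, so p can be estimated as 2 * (share of "Yes") - 1.

```python
import random


def randomized_answer(is_dog, rng):
    """One friend's reply under the coin-flip rule from the quoted example."""
    heads = rng.random() < 0.5
    # Heads: answer truthfully. Tails: always say "Yes".
    return is_dog if heads else True


def estimate_dog_fraction(answers):
    """Invert the coin flip: E[yes share] = 0.5 * p + 0.5, so p = 2 * yes - 1."""
    yes_share = sum(answers) / len(answers)
    # Clamp, because sampling noise can push the raw estimate outside [0, 1].
    return max(0.0, min(1.0, 2.0 * yes_share - 1.0))


def simulate(n_friends=100_000, true_fraction=0.2, seed=0):
    """Return (actual dog fraction, fraction estimated from noisy replies)."""
    rng = random.Random(seed)
    # Hypothetical population: each friend is a dog with probability true_fraction.
    friends = [rng.random() < true_fraction for _ in range(n_friends)]
    answers = [randomized_answer(is_dog, rng) for is_dog in friends]
    return sum(friends) / n_friends, estimate_dog_fraction(answers)


if __name__ == "__main__":
    actual, estimated = simulate()
    print(f"actual fraction of dogs:      {actual:.3f}")
    print(f"estimated from noisy replies: {estimated:.3f}")
```

With enough friends the estimate lands close to the true fraction, even though no single "Yes" tells you much about the friend who gave it. Note that in this simplified version a "No" is always truthful, so only the "Yes" answers carry deniability.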

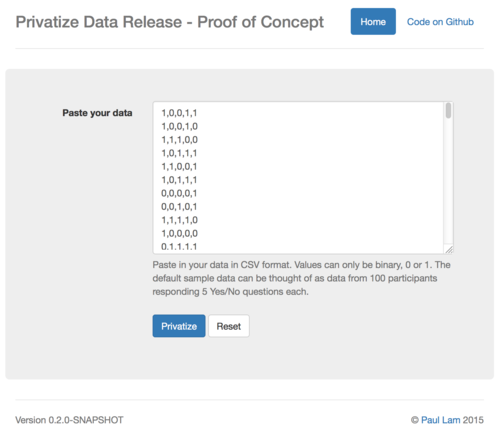

By this point, I hope you have a sense of what DP is and why it's useful. But how does it work? Luckily, I found out that Moritz open-sourced his MWEM algorithm on GitHub. I then spent a couple of weekends deploying his Julia package and building a web application around it.

The site is live at masking.io (note that it is served over unencrypted HTTP). Give it a try! It doesn't do much yet, though.

masking.io is a weekend hack, so I'm not sure if I'll do anything more with it. Email me if you think it could be useful to you. For now the app only takes binary values, so pretend your data are all Yes/No responses. The app can be patched to take any numeric data; Moritz describes how to do that in his paper [3], and he's open to sharing his existing C# code for reference.

The web application works by exposing Moritz's package as a RESTful API using Morsel.jl. The frontend is built with ClojureScript's Reagent. I couldn't find any PaaS that can run Julia applications, so I containerized the Julia part in Docker and deployed it that way. That was a bit annoying, as I kept finding bugs and had to submit a few patches along the way. I guess not many people are deploying Julia applications yet.

The whole stack is open source: web application, DP microservice, and MWEM algorithm. Let me know your thoughts.

References:

- [1] Ji, et al., Differential Privacy and Machine Learning: a Survey and Review, arXiv:1412.7584 [cs.LG]

- [2] Erlingsson, et al., RAPPOR: Randomized Aggregatable Privacy-Preserving Ordinal Response, arXiv:1407.6981 [cs.CR]

- [3] Hardt, et al., A simple and practical algorithm for differentially private data release, arXiv:1012.4763 [cs.DS]

With thanks to Chris Diehl for bringing DP to my attention.